
CRI ADX

Last Updated: 2025-05-07 13:34 p


Latency is the time between when a sound is triggered and when it actually starts playing through the speaker. It has various causes, such as the server frequency and the size of the mixing buffer.

Latency problems are worst when the sound mixing buffer is very large or when there are multiple mixing layers.

On smartphones and tablets, the system also mixes the application's sounds with other audio, such as the voice of a phone call, so the mixing buffer becomes larger or has multiple layers.

ADX supports low-latency playback on Android, but the number of simultaneous playbacks and the sampling rate are limited.

ADX's default settings include headroom (which adds latency) so that sound is not interrupted. If the target specifications are clearly known, you may be able to adjust the size of the mixing buffer accordingly.

ADX periodically services the decoding and streaming buffers and updates the sound parameters. How often this happens is determined by the server frequency.

For example, when the server frequency is 60 Hz, the processing runs at intervals of about 16.67 msec (1/60 s), so a request can be delayed by up to one full interval before it is processed.
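The arithmetic above can be sketched as follows. This is a minimal Python illustration, not ADX code: the function names are hypothetical, and the model assumes server runs occur at exactly t = 0, T, 2T, ... and that a request made exactly on a run boundary is handled by that run.

```python
def server_interval_ms(server_frequency_hz: float) -> float:
    """Interval between two consecutive server runs, in milliseconds."""
    return 1000.0 / server_frequency_hz


def wait_for_next_run_ms(request_ms: float, server_frequency_hz: float) -> float:
    """Time a request made at request_ms waits until the next server run.

    Hypothetical model: runs happen at t = 0, T, 2T, ... and a request made
    exactly on a run boundary is handled by that run (zero wait).
    """
    period = server_interval_ms(server_frequency_hz)
    offset = request_ms % period
    return 0.0 if offset == 0.0 else period - offset


if __name__ == "__main__":
    for hz in (30, 60, 120):
        print(f"{hz:>3} Hz -> interval {server_interval_ms(hz):.2f} msec")
    # A request made 1 msec after a run at 60 Hz waits almost a full interval.
    print(f"{wait_for_next_run_ms(1.0, 60):.2f} msec")
```

Averaged over random request times, the wait in this model is about half an interval; the worst case is one full interval, which is why raising the server frequency shortens it.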

Because this processing is critical, the thread that performs it is configured with a higher priority than the application.

The interval of the sound processing must be considered separately from that of the game processing.

Otherwise, various problems may occur, such as sound dropouts, noise, short loops, or fluctuations in playback timing.

In ADX, decoding is performed in the time available within each server cycle.

Sound may not play back as intended while a process that heavily blocks resources is running in the background.

Avoid running processes that lock hardware resources such as the file system.

Note that CRI's file system and movie playback (Sofdec) libraries are designed not to block ADX.

Decoding is performed in the smallest possible processing unit (for example, 128 samples).

Finer control than this is generally not possible.
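To get a feel for this granularity, the duration of one decoding unit can be computed from the sampling rate. The Python sketch below assumes a fixed 128-sample unit, the example value from the text (the actual unit may differ by codec and platform), and uses a hypothetical helper, `snap_to_unit`, to show where a requested sample position would land if control can only take effect at unit boundaries.

```python
DECODE_UNIT_SAMPLES = 128  # example unit size from the text; actual size may differ


def unit_duration_ms(sample_rate_hz: int) -> float:
    """Duration of one decoding unit at the given sampling rate, in msec."""
    return DECODE_UNIT_SAMPLES * 1000.0 / sample_rate_hz


def snap_to_unit(sample_position: int) -> int:
    """Round a sample position down to the start of its decoding unit (hypothetical)."""
    return (sample_position // DECODE_UNIT_SAMPLES) * DECODE_UNIT_SAMPLES


if __name__ == "__main__":
    for rate in (32000, 44100, 48000):
        print(f"{rate} Hz: one unit = {unit_duration_ms(rate):.2f} msec")
    print(snap_to_unit(300))  # 300 falls in the unit starting at 256
```

At 48 kHz, one 128-sample unit lasts about 2.67 msec, so under this assumption timing cannot be controlled more precisely than a few milliseconds.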

Multiple decoded sounds are mixed in buffers whose sizes correspond to durations of 60 to 130 msec.

The buffer can be shortened on high-performance hardware, but this often makes processing unstable.

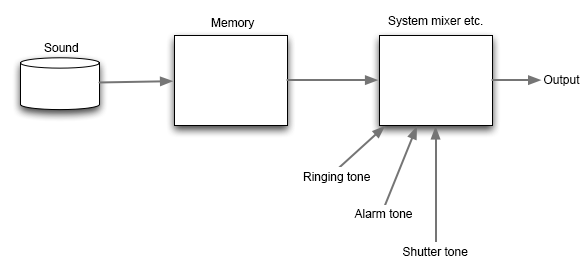
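Since the mixing buffer is specified as a duration, it can help to convert between milliseconds and buffer sizes when reasoning about the 60 to 130 msec range. The Python sketch below is a unit-conversion aid only; the function names are hypothetical, and it assumes interleaved 16-bit PCM with a configurable channel count, which is an illustrative assumption rather than ADX's internal buffer format.

```python
def duration_to_frames(duration_ms: float, sample_rate_hz: int) -> int:
    """Number of sample frames (one sample per channel) in duration_ms."""
    return round(duration_ms * sample_rate_hz / 1000)


def frames_to_duration_ms(frames: int, sample_rate_hz: int) -> float:
    """Duration in msec of a buffer holding the given number of frames."""
    return frames * 1000.0 / sample_rate_hz


def buffer_bytes(duration_ms: float, sample_rate_hz: int,
                 channels: int = 2, bytes_per_sample: int = 2) -> int:
    """Memory for interleaved PCM (16-bit stereo by default, an assumption)."""
    return duration_to_frames(duration_ms, sample_rate_hz) * channels * bytes_per_sample


if __name__ == "__main__":
    for ms in (60, 130):
        frames = duration_to_frames(ms, 48000)
        print(f"{ms} msec at 48 kHz: {frames} frames, {buffer_bytes(ms, 48000)} bytes")
```

Halving the buffer duration halves its contribution to latency, which is exactly the trade-off against stability described above.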

On devices such as Android phones and iPhones, the OS may additionally mix the application's sound with the sounds of other applications.

This adds latency, because the processing buffers used at this level are typically set to longer durations.

Even with low-latency playback on Android, a latency of about 100 msec remains.

On iOS, the latency is about 10 msec, which makes it relatively easy to create a musical instrument app or a music game.

On Android, however, the game must be designed around this latency from the start.

The mixing latency depends on the device and OS.

Latency from mixing performed outside the middleware can be large. On PC, it can be shortened significantly by using WASAPI.
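A rough latency budget can be estimated by adding up the stages discussed so far. The Python sketch below is a simplified model under stated assumptions: it treats the worst case as one full server interval plus the middleware mixing buffer plus an OS-level mixing stage, and it ignores decoding time, hardware output latency, and input latency. The class and field names are hypothetical, and the OS-level value has to come from measurements on the target device.

```python
from dataclasses import dataclass


@dataclass
class LatencyStages:
    """Hypothetical breakdown of playback latency; all durations in msec."""
    server_frequency_hz: float  # how often the sound server runs
    mix_buffer_ms: float        # middleware mixing buffer duration
    os_mix_ms: float = 0.0      # extra OS-level mixing; device and OS dependent

    def server_wait_ms(self) -> float:
        # Worst case: the request arrives just after a server run.
        return 1000.0 / self.server_frequency_hz

    def worst_case_ms(self) -> float:
        return self.server_wait_ms() + self.mix_buffer_ms + self.os_mix_ms


if __name__ == "__main__":
    # 60 Hz server and the shortest mixing buffer mentioned in the text;
    # os_mix_ms is left at 0 because it must be measured per device.
    stages = LatencyStages(server_frequency_hz=60, mix_buffer_ms=60)
    print(f"{stages.worst_case_ms():.2f} msec before any OS-level mixing")
```

Keeping the stages separate makes it clear which term a given change affects: raising the server frequency shrinks only the first term, while WASAPI on PC targets the OS-level term.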

For more information, refer to the SDK documentation for each target platform.

When a sound is played in response to a button press, or when an OS built-in button sound is played, the processing is usually implemented so that the sound does not play repeatedly.

As a result, the first playback may respond quickly while the second and subsequent playbacks have some latency.
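The effect described above can be illustrated with a simple model of a repetition guard. This Python sketch is hypothetical: it assumes the guard enforces a minimum interval between starts of the same sound and defers a request that arrives too early, which is only one possible way such a mechanism might work. Real OS or middleware implementations may handle repeated requests differently.

```python
from typing import Optional


class RepeatGuard:
    """Hypothetical guard enforcing a minimum gap between starts of one sound."""

    def __init__(self, min_interval_ms: float) -> None:
        self.min_interval_ms = min_interval_ms
        self.last_start_ms: Optional[float] = None

    def start_time(self, request_ms: float) -> float:
        """Return when playback actually starts for a request made at request_ms."""
        if self.last_start_ms is None:
            start = request_ms  # first press: no extra delay from the guard
        else:
            # Later presses wait until the minimum interval has elapsed.
            start = max(request_ms, self.last_start_ms + self.min_interval_ms)
        self.last_start_ms = start
        return start


if __name__ == "__main__":
    guard = RepeatGuard(min_interval_ms=50)
    for press in (0, 10, 200):
        start = guard.start_time(press)
        print(f"press at {press} msec -> starts at {start} msec (delay {start - press} msec)")
```

In this model the first press starts immediately, a quick second press is pushed back by 40 msec, and a press long after the previous one is again immediate, matching the pattern of good first response followed by delayed repeats.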

On some devices, additional latency may also be introduced while the OS passes the information that the screen has been touched on to the application.